Having already disrupted art-making with DALL-E and writing with ChatGPT, OpenAI remains intent on putting its mark on the 3D modeling space.

In a recent paper from OpenAI, researchers Heewoo Jun and Alex Nichol outlined the development of Shap-E, a text-to-3D model that radically simplifies the means of generating 3D assets. It has the potential to upend the status quo in a range of industries including architecture, interior design, and gaming.

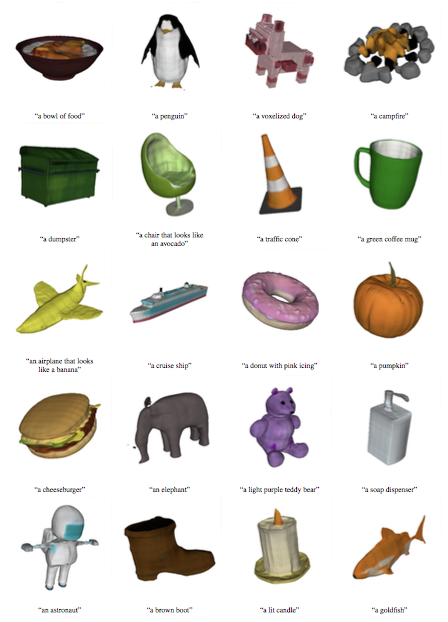

Selection of 3D samples generated by Shap-E. Photo: OpenAI.

Though still in the early stages of research and development, Shap-E will allow users to type in a text prompt and generate a 3D model, one that can potentially be printed. Examples for which the researchers posted images included “a traffic cone,” “a chair that looks like a tree,” and “an airplane that looks like a banana.”

At present, producing 3D models requires considerable expertise in industry-specific software programs, such as 3ds Max, Autodesk Maya, and Blender.

An airplane that looks like a banana. Gif: OpenAI

“We present Shap-E, a conditional generative model for 3D assets,” Jun and Nichol wrote in the paper “Shap-E: Generating Conditional 3D Implicit Functions.” “When trained on a large dataset of paired 3D and text data, our resulting models are capable of generating complex and diverse 3D assets in a matter of seconds.”

Shap-E is OpenAI’s second foray into 3D modeling and follows Point-E, whose release in late 2022 coincided with that of ChatGPT, which monopolized media and consumer attention. Another reason for Point-E’s somewhat lackluster launch was the haphazard results it produced. While Shap-E’s renderings have yet to reach the quality of industry competitors, its speed is alarming, with the open-source software requiring 13 seconds to produce an image from a text prompt.

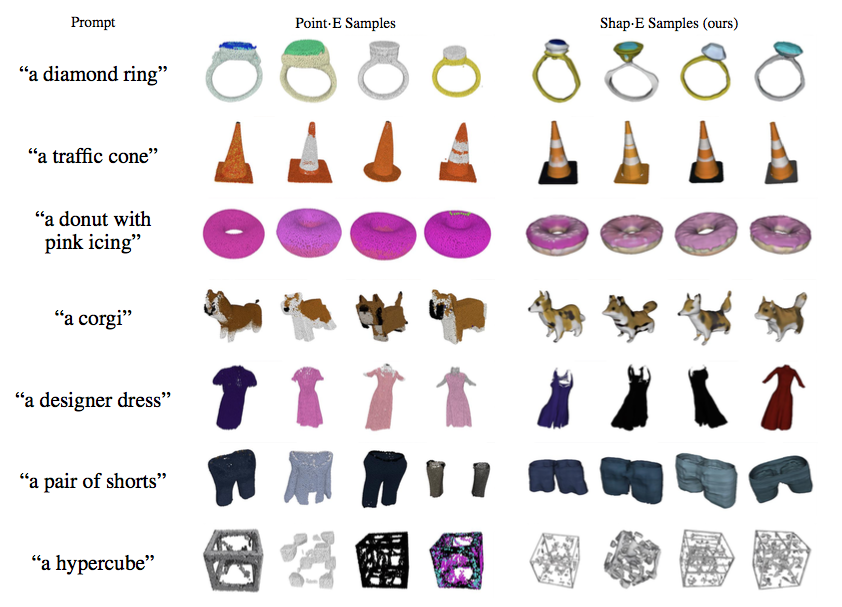

Comparison of Point-E and Shap-E images. Photo: OpenAI.

In addition to being faster, Shap-E produces renderings that have softer edges and clearer shadows, are less pixelated than those of its predecessor, and don’t rely on a reference image. The researchers said it “reaches comparable or better sample qualities despite modeling a higher dimension.”

At present, OpenAI continues to work on Shap-E, with researchers noting that the rough results can be smoothed out using other 3D generative programs, though further finessing may require OpenAI to work with larger, labeled 3D datasets. For now, 3D model enthusiasts can access files and instructions on Shap-E’s open-source GitHub page.

Content retrieved from: https://news.artnet.com/art-world/openai-text-to-3d-model-shap-e-2302143.